Making maintainable browser end-to-end tests doesn’t have to be a target for three engineers in a week-long sprint. You can have a few meaningful tests finished today.

There are lots of tools available to help you get started quickly. The most efficient of these now use AI models to bootstrap your tests. Today we’re going to show you the value of using AI-based tools to get started with end-to-end testing.

Playwright is the best end-to-end testing tool for browsers on the market, and that’s why we’ll start by focusing on Playwright’s MCP server.

Playwright also recently released support for agents, which we’ll cover in a future blog post.

MCP matters more for end-to-end tests

It’s likely that you’re already using an AI model to help you write unit and integration tests alongside your implementation code. Personally, I find that extra agents or MCP servers aren’t really necessary for cases where we can easily provide all of the context that an AI needs ourselves - like a few files that we can point to.

However, in the case of end-to-end tests, pointing to your test file or your implementation code won’t get you as far. To write good unit tests, the AI needs to understand the structure of your implementation. To write good end-to-end tests, the AI needs to understand the structure of your running application. In the case of Playwright and the browser, this implies either the HTML of a running web page or a similar representation like an ARIA snapshot.

Model Context Protocol (MCP) is the best way to provide that kind of context to an AI model today.

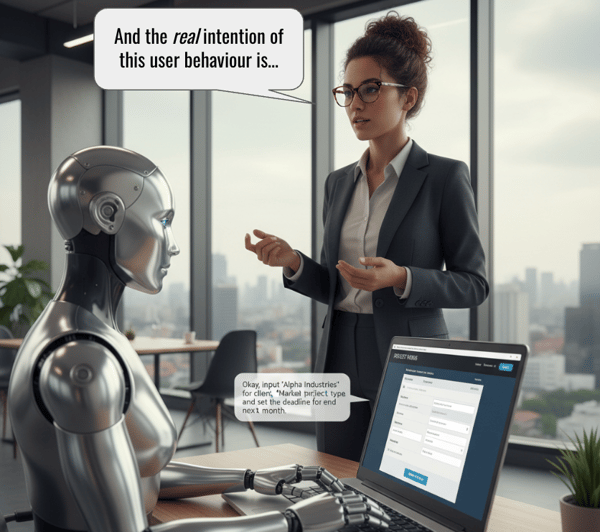

When a model can view different parts of a web application on your behalf and read the structure of its pages, it is far better placed to produce meaningful tests with well-formed locators.

Luckily, Playwright already has great support for MCP.

Bootstrapping your test

Garbage in, garbage out.

The content of the prompt you give your AI will heavily affect the quality of the outcome. In order to achieve decent results, we need to do better than “test my login flow”!

A great prompt for creating an end-to-end test has three components:

- Environment setup (location, authentication, test data)

- Explanation in plain English of the steps involved in your testing scenario

- System prompt telling the AI how to use the MCP server and what your success criteria are

Let’s break down each of these components.

Environment setup

Playwright’s MCP server needs a small amount of configuration to be able to interact with your application the same way your users would. Normally the most important part of this is providing some kind of authentication state.

The Playwright MCP server doesn’t read the projects or storage states that we normally set up in our Playwright config. However, you can pass it a storage state file directly, and that file can contain authentication credentials.

npx @playwright/mcp@latest --isolated --storage-state .auth/api-user.json

In our projects, we have scripts that help us create and delete these MCP users and their authentication files on demand.
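To give a feel for what such a script produces, here is a minimal sketch of the storage state shape that Playwright reads: a list of cookies plus per-origin localStorage entries. The helper name `storageStateForSession`, the cookie name `session`, and the placeholder token are all hypothetical. It also assumes your application authenticates with a single session cookie, which may not match your setup.

```typescript
// Shape of a Playwright storage state file: cookies plus per-origin localStorage.
interface StorageStateCookie {
  name: string;
  value: string;
  domain: string;
  path: string;
  expires: number; // Unix time in seconds, or -1 for a session cookie
  httpOnly: boolean;
  secure: boolean;
  sameSite: "Strict" | "Lax" | "None";
}

interface StorageState {
  cookies: StorageStateCookie[];
  origins: { origin: string; localStorage: { name: string; value: string }[] }[];
}

// Hypothetical helper: wraps a test user's session token in a storage state.
// The cookie name "session" is an assumption; use whatever your app sets on login.
function storageStateForSession(domain: string, token: string, expires: number): StorageState {
  return {
    cookies: [
      {
        name: "session",
        value: token,
        domain,
        path: "/",
        expires,
        httpOnly: true,
        secure: true,
        sameSite: "Lax",
      },
    ],
    origins: [], // no localStorage entries in this sketch
  };
}

// Example: a placeholder token valid for one hour, serialized as the file content.
const oneHourFromNow = Math.floor(Date.now() / 1000) + 3600;
const state = storageStateForSession("endform.dev", "example-token", oneHourFromNow);
console.log(JSON.stringify(state, null, 2));
```

Writing that JSON to `.auth/api-user.json` gives you the file that the command above expects. If your app keeps its token in localStorage instead, the same idea applies with an entry under `origins`.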

Don’t forget to update your MCP server config so that it uses this command with the storage state file you created.
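As a rough sketch, you could generate the server entry from the storage state path so that the command and the config stay in sync. The `mcpServers` key and the `playwright` label follow a convention that several MCP clients use, but they are assumptions here, so check your client’s documentation for the exact shape. The function name `playwrightMcpEntry` is hypothetical, and the flags are the ones from the command earlier in this section.

```typescript
// One MCP server entry: the command to run and its arguments.
// The exact config format depends on your MCP client; treat this shape as an assumption.
interface McpServerEntry {
  command: string;
  args: string[];
}

// Builds the entry for the Playwright MCP server using the flags shown earlier.
function playwrightMcpEntry(storageStatePath: string): McpServerEntry {
  return {
    command: "npx",
    args: ["@playwright/mcp@latest", "--isolated", "--storage-state", storageStatePath],
  };
}

// Hypothetical client config; the "playwright" key is only a label for the server.
const mcpConfig = {
  mcpServers: {
    playwright: playwrightMcpEntry(".auth/api-user.json"),
  },
};

// Print the JSON so it can be pasted into the client's config file.
console.log(JSON.stringify(mcpConfig, null, 2));
```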

We have more information on how to manage authentication state and set up your MCP server in our Playwright tutorial.

We always include a description of the environment in the test creation prompt, along with any other crucial details about what the environment looks like.

# Environment setup

- The website under test is at https://endform.dev

- You're already logged in as a test user

- The test user is a member of the "Endform" organization etc.

Scenario description

Ideally, the scenario description is where you spend most of your effort. You are the one with the expertise in how your application works and what a meaningful test looks like for you.

We highly recommend creating these as markdown files that stay in your code repository alongside your tests. This has even become a convention that the new Playwright Agents use!

Try to keep your specifications short yet specific. Make sure that they’re written in a way that anyone in your team or your company could understand.

# New team activity is visible in the activity log

- Navigate to the dashboard

- Navigate to the activity section

- Verify that one of the elements shows "you signed up"

- Click the "Create a new team" button

- Fill in the team name and click the "Create team" button

- Verify that one of the elements in the activity log shows "you created a new team"

These description files won’t just help you make the first iteration of your test now, but they will help retain context around the meaning of your test as you improve it over time.

System prompt

We also need to tell our AI what success looks like to us and what we want our tests to look like.

Prompts like these are great at inserting best practices, such as using modern locators, using test.step to group multiple actions when they’re semantically one step, and using meaningful assertions from the start.

A good system prompt reduces the work needed to take a first iteration of a test to something shippable.

My advice is to not write your own system prompt, but to copy or adjust system prompts created by people like the Playwright framework maintainers. We’ve used Debbie O’Brien’s prompt, which has worked well for us.

Bringing it all together

When we bring these elements together, we get a prompt that looks like this:

# Test Generation with Playwright MCP

Generate comprehensive Playwright tests for one scenario by following a systematic exploration and testing workflow.

## Process Overview

You will be given a testing scenario. Your task is to:

1. **Explore First** - Use Playwright MCP tools to navigate and understand the website

2. **Generate Tests** - Create a well-structured TypeScript test using `@playwright/test` for that one scenario

... more details from the system prompt...

# Environment setup

- The website under test is at https://endform.dev

- You're already logged in as a test user

... more details from the environment setup...

# Scenario description

- Navigate to the dashboard

- Navigate to the activity section

... more details from the scenario description...

With well-constructed, carefully thought-through prompts like this, you’ll be surprised how good the first iterations of your end-to-end tests can be.
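If you keep the three parts separate, as we suggest for the scenario files, stitching them into one prompt takes only a few lines. This is a minimal sketch: the `PromptParts` shape and the `buildPrompt` function are hypothetical names for illustration, not part of Playwright or its MCP server, and the example strings are shortened from the prompt above.

```typescript
// The three components of a test creation prompt.
interface PromptParts {
  systemPrompt: string;  // full text, including its own heading
  environment: string[]; // one entry per environment fact
  scenario: string[];    // one entry per scenario step
}

// Renders a list of items as markdown bullets.
function toBullets(items: string[]): string {
  return items.map((item) => `- ${item}`).join("\n");
}

// Joins the system prompt, environment setup and scenario into one prompt,
// in the same order as the example above.
function buildPrompt(parts: PromptParts): string {
  return [
    parts.systemPrompt.trim(),
    "# Environment setup",
    toBullets(parts.environment),
    "# Scenario description",
    toBullets(parts.scenario),
  ].join("\n\n");
}

const prompt = buildPrompt({
  systemPrompt: "# Test Generation with Playwright MCP\n\nGenerate comprehensive Playwright tests for one scenario.",
  environment: [
    "The website under test is at https://endform.dev",
    "You're already logged in as a test user",
  ],
  scenario: ["Navigate to the dashboard", "Navigate to the activity section"],
});

console.log(prompt);
```

Keeping the parts separate means the system prompt and environment setup can be reused unchanged while only the scenario changes from test to test.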

Should I ship it?

Absolutely! Once you’ve reviewed it.

One way to help your review would be to think about it in two parts.

First, we review the code in the script.

Is it easy to understand? We often find that scripts created by the AI are overcomplicated - and we spend time after the AI’s first draft trying to make them as simple and straightforward as possible.

Does it follow best practices? We normally tweak a few locators according to our liking and often adjust the way that steps are created and labeled.

Second, and more importantly, we review the intent of the test. Using Playwright MCP frees up more time for us to focus on this.

It’s worth taking some extra time here to make sure that the script’s actions accurately reflect the meaning of your specification. Now that you have the test script and the specification in front of you, also ask whether they accurately reflect the behavior that you expect from your users.

If you thought that the MCP server was great at making your first iteration, it’s also pretty sharp at improving tests based on your requirements. It’s often worth going through an extra iteration or two of your test specification and updating your test before you ship it and promptly forget most of what you’ve learned during the process.

How this changes things

Writing your first end-to-end test, as well as writing your seventh or your eighth end-to-end test, has never been easier. If you learn to use tools like Playwright’s MCP server, you’ll start to understand that the cost of creating tests has been greatly reduced. Ideally, this should make it possible for you and your team to write end-to-end tests both more frequently and with higher quality.

Your mindset can change from “Writing tests is slow and tedious” to “Getting to baseline is fast, refinement is where I add value”.

Give it a try and see how it works for you! Meaningful end-to-end tests do wonders for product stability and the psychological safety of engineers who ship code often.

Other notes

- The Playwright MCP server repository

- We have a complete Playwright tutorial showing you how to set up a full production-ready end-to-end testing suite with Playwright.

- Endform, our platform, is a managed service that can run your Playwright tests reliably and quickly as you scale your suite.