Generate tests with Playwright MCP

In this guide, we will use the Playwright MCP server to generate tests for your application.

Setting up the Playwright MCP Server

In this part of the tutorial, we will be using the Playwright MCP server.

We will use Cursor for the purposes of this tutorial, but you could use any IDE or CLI tool that supports MCP in its place.

Be sure to check out the installation instructions in the Playwright MCP repository to correctly set up the Playwright MCP server before continuing.

For example, in Cursor, your mcp.json file could look like this:

```json
{
  "mcpServers": {
    "playwright": {
      "command": "npx",
      "args": ["@playwright/mcp@latest"]
    }
  }
}
```

Authenticating with the Playwright MCP Server

At the time of writing, the Playwright MCP server doesn’t have support for the projects or storage states that we set up in our Playwright config in the previous step.

You can achieve something similar by passing the following flags to the MCP server:

- `--isolated`: keep the browser profile in memory; do not save it to disk.
- `--storage-state <path>`: path to the storage state file for isolated sessions.

Our MCP command with these arguments could look like this:

```sh
npx @playwright/mcp@latest --isolated --storage-state .auth/api-user.json
```

The catch is that, at the moment, our projects are configured to create and delete users during the course of a suite run, so that user won’t exist!

Let’s add a script to help us create and remove users for the MCP server when we need them.

```typescript
// import existing user creation and deletion functions

const scriptArg = process.argv[2];

if (scriptArg !== "create" && scriptArg !== "delete") {
  console.error(
    `Error: Invalid argument "${scriptArg}". Expected "create" or "delete".`,
  );
  process.exit(1);
}

// create or delete user based on script arg
```

Filling in the gaps, the script could look like this:

```typescript
import { createUser, deleteUser } from "./setup-utils";

const scriptArg = process.argv[2];

// Only accept the two supported actions; anything else is a usage error.
if (scriptArg !== "create" && scriptArg !== "delete") {
  console.error(
    `Error: Invalid argument "${scriptArg}". Expected "create" or "delete".`,
  );
  process.exit(1);
}

// Can you come up with a way of getting the baseURL from the playwright.config.ts file
// instead of hardcoding it here?
const baseURL = "https://endform-playwright-tutorial.vercel.app";

// Top-level `await` requires running this file as an ES module.
if (scriptArg === "create") {
  await createUser(baseURL, "mcp");
} else if (scriptArg === "delete") {
  await deleteUser(baseURL, "mcp");
}
```

Now we can make an MCP user by running:

```sh
pnpm mcp-user create
```

And delete it by running:

```sh
pnpm mcp-user delete
```

Don’t forget to update our MCP server configuration for our new user:

```json
{
  "mcpServers": {
    "playwright": {
      "command": "npx",
      "args": [
        "@playwright/mcp@latest",
        "--isolated",
        "--storage-state",
        "/full/path/to/your/project/.auth/mcp-user.json"
      ]
    }
  }
}
```

Generating our first test

Now that we have an MCP user whose storage state is saved to a file, and an MCP server configured to use that storage state file when running the browser, we are in a good position to start generating tests that require authenticated users.

When using an AI together with an MCP server, it’s worth taking the time to craft a good prompt that gives the AI as much help as possible when generating tests.

To get started, we’ll take inspiration from prompts by Debbie from the Playwright team:

Test generation background

Start with the requirements for the LLM:

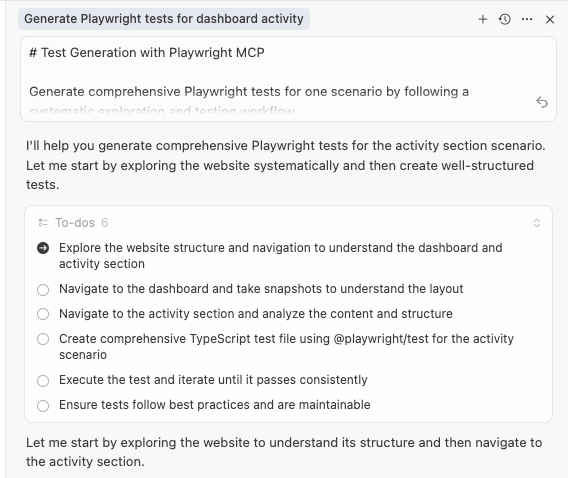

```markdown
# Test Generation with Playwright MCP

Generate comprehensive Playwright tests for one scenario by following a systematic exploration and testing workflow.

## Process Overview

You will be given a testing scenario. Your task is to:

1. **Explore First** - Use Playwright MCP tools to navigate and understand the website
2. **Generate Tests** - Create a well-structured TypeScript test using `@playwright/test` for that one scenario
3. **Validate** - Execute test and iterate until it passes consistently
4. **Refine** - Ensure test follows best practices and is maintainable

## Critical Requirements

- **Always explore the website first** - Never generate tests based solely on scenario descriptions
- **Use systematic exploration** - Navigate, take snapshots, and understand the application flow
- **Focus on one scenario** - Generate tests for the specific scenario provided
- **Save to tests directory** - All generated test files go in the `tests/` folder
- **Iterate until passing** - Run tests and fix issues until they pass consistently

## Success Criteria

- Tests use accessibility-first locators (getByRole, getByLabel, etc.)
- Tests follow proper structure with describe blocks and test steps
- Tests include meaningful assertions that reflect user expectations
- Tests are resilient and maintainable
- All tests pass consistently when executed
```

Setup information

Then include some information about our setup:

```markdown
# Setup information

- Our website is available at https://endform-playwright-tutorial.vercel.app
- You are already logged in as a temporary user
```

The test scenario

Finally, we should provide a detailed description of the test scenario we want to generate tests for.

```markdown
# Test scenario

- Navigate to the dashboard.
- Navigate to the activity section.
- Verify that one of the elements in the activity section is "you signed up".
- Verify that one of the elements in the activity section is "you created a new team".
```

Combine all these pieces into a single prompt and see what the AI generates!
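If you plan to try several scenarios, it can help to keep the background and setup text fixed and swap only the scenario. Here is a minimal sketch, assuming you keep each section as a string; `PromptParts` and `buildPrompt` are hypothetical names, not part of Playwright or the MCP server.

```typescript
// Hypothetical helper: it only concatenates the prompt sections in the
// order used in this guide (background, setup, scenario).
export interface PromptParts {
  background: string; // the "Test Generation with Playwright MCP" requirements
  setup: string; // the "# Setup information" section
  scenario: string; // the "# Test scenario" section
}

export function buildPrompt({ background, setup, scenario }: PromptParts): string {
  // Trim each part so stray blank lines don't pile up, then separate the
  // sections with one empty line so the Markdown headings stay distinct.
  return [background, setup, scenario].map((part) => part.trim()).join("\n\n");
}
```

You could print the result with `console.log(buildPrompt(...))` and paste it into your MCP client's chat, changing only `scenario` between runs.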

Here’s an example of what it could look like in Cursor:

Here’s a generated test that I got back from the AI. Can you spot any major flaws that we need to fix?

```typescript
import { expect, test } from "@playwright/test";

test.describe("Activity Section", () => {
  test("should navigate to dashboard activity section and verify user activities", async ({
    page,
  }) => {
    await test.step("navigate to the dashboard", async () => {
      await page.goto("/dashboard");
      await expect(page).toHaveURL("/dashboard");
      await expect(
        page.getByRole("heading", { name: "Team Settings" }),
      ).toBeVisible();
    });

    await test.step("navigate to the activity section", async () => {
      await page.getByRole("link", { name: "Activity" }).click();
      await expect(page).toHaveURL("/dashboard/activity");
      await expect(
        page.getByRole("heading", { name: "Activity Log" }),
      ).toBeVisible();
    });

    await test.step("verify activity section contains expected elements", async () => {
      await expect(page.getByText("Recent Activity")).toBeVisible();

      const activityList = page.getByRole("list");
      await expect(activityList).toBeVisible();
    });

    await test.step("verify 'you signed up' activity is present", async () => {
      const signupActivity = page.getByRole("listitem").filter({
        hasText: "You signed up",
      });
      await expect(signupActivity).toBeVisible();

      await expect(signupActivity.getByText("You signed up")).toBeVisible();
      await expect(signupActivity.getByText("just now")).toBeVisible();
    });

    await test.step("verify 'you created a new team' activity is present", async () => {
      const teamCreationActivity = page.getByRole("listitem").filter({
        hasText: "You created a new team",
      });
      await expect(teamCreationActivity).toBeVisible();

      await expect(
        teamCreationActivity.getByText("You created a new team"),
      ).toBeVisible();
      await expect(teamCreationActivity.getByText("just now")).toBeVisible();
    });

    await test.step("verify both activities are present in the activity log", async () => {
      const activityItems = page.getByRole("listitem");
      await expect(activityItems).toHaveCount(2);

      // Verify both texts exist on the page
      await expect(page.getByText("You signed up")).toBeVisible();
      await expect(page.getByText("You created a new team")).toBeVisible();
    });
  });
});
```

Here’s the same test after our fixes; the comments point out what changed:

```typescript
import { expect, test } from "@playwright/test";

test.describe("Activity Section", () => {
  test("should navigate to dashboard activity section and verify user activities", async ({
    page,
  }) => {
    await test.step("navigate to the dashboard", async () => {
      await page.goto("/dashboard");
      await expect(page).toHaveURL("/dashboard");
      await expect(
        page.getByRole("heading", { name: "Team Settings" }),
      ).toBeVisible();
    });

    await test.step("navigate to the activity section", async () => {
      await page.getByRole("link", { name: "Activity" }).click();
      await expect(page).toHaveURL("/dashboard/activity");
      await expect(
        page.getByRole("heading", { name: "Activity Log" }),
      ).toBeVisible();
    });

    await test.step("verify activity section contains expected elements", async () => {
      await expect(page.getByText("Recent Activity")).toBeVisible();

      const activityList = page.getByRole("list");
      await expect(activityList).toBeVisible();
    });

    await test.step("verify 'you signed up' activity is present", async () => {
      const signupActivity = page.getByRole("listitem").filter({
        hasText: "You signed up",
      });
      await expect(signupActivity).toBeVisible();

      await expect(signupActivity.getByText("You signed up")).toBeVisible();
      // "just now" might not always be the matching time string, so let's not check for it
    });

    await test.step("verify 'you created a new team' activity is present", async () => {
      const teamCreationActivity = page.getByRole("listitem").filter({
        hasText: "You created a new team",
      });
      await expect(teamCreationActivity).toBeVisible();

      await expect(
        teamCreationActivity.getByText("You created a new team"),
      ).toBeVisible();
      // "just now" might not always be the matching time string, so let's not check for it
    });

    // The extra step was unnecessary; it would be a shame if this test failed just because more activities were added
  });
});
```

Otherwise, it was a pretty good test: we’ve got solid, modern locators, and it labels its steps in an understandable way.

Generating more tests

Here are a few more prompts you can try out to generate more tests (don’t forget to add the rest of the setup & background information from the previous steps):
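Some of the scenarios below ask for a randomly generated email. Rather than letting each generated test improvise its own, you could ask the AI to reuse a small shared helper. This is a minimal sketch, assuming the app accepts any well-formed address and does not check that the domain exists; `randomEmail` is a hypothetical name.

```typescript
// Hypothetical helper for scenarios that need a fresh account.
// Assumption: the app accepts any well-formed address; example.com is a
// domain reserved for documentation (RFC 2606), so no real inbox is involved.
export function randomEmail(prefix = "test"): string {
  // A base-36 timestamp plus a random suffix makes collisions between
  // repeated or parallel runs very unlikely (not a cryptographic guarantee).
  const timestamp = Date.now().toString(36);
  const suffix = Math.random().toString(36).slice(2, 10);
  return `${prefix}-${timestamp}-${suffix}@example.com`;
}
```

You could then mention it in a scenario prompt, for example "use `randomEmail()` from `tests/utils.ts`" (a hypothetical path), so every generated test creates addresses the same way.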

Change the password

```markdown
# Test scenario

- Navigate to the dashboard.
- Navigate to the general section.
- Check what my current email is and make a copy of it.
- Navigate to the security page.
- Change the password to "newpassword123".
- The current password is "testpassword123".
- Sign out.
- Sign in with the new password and the email we copied earlier.
- Verify that we are logged in.
```

Purchase a subscription

```markdown
# Test scenario

- Navigate to the dashboard at /dashboard.
- Check that the current plan is "Free".
- Click the "Change Plan" button.
- Choose the "Plus" plan.
- Complete the purchase of the "Plus" plan.
- Verify that the current plan is now "Plus".
```

Modify user name

```markdown
# Test scenario

- Navigate to the dashboard at /dashboard.
- Navigate to the general section.
- Change the user name to "John Doe".
- Navigate to the team settings section.
- Verify that the user's new name is displayed in the team members list.
```

Change the email

```markdown
# Test scenario

- Navigate to /.
- (Sign out of the current user. This step isn't part of what we're testing, but we need a clean new user here so that we don't interfere with other tests.)
- Sign up as a new user with a randomly generated email and password "testpassword123".
- Navigate to the general section.
- Change the email to a new randomly generated email.
- Sign out.
- Sign in with the password "testpassword123" and the new email.
- Verify that we are logged in and on the team settings page.
- Verify that the new email is displayed on the general page in the email field.
```

Invite a new user

```markdown
# Test scenario

- Navigate to the dashboard at /dashboard.
- Navigate to the team settings section.
- Copy the name/email of the only current team member.
- Fill in the Invite Team Member email field with a new randomly generated email.
- Click the "Invite" button.
- The application will console.log the invitation id in the format [inviteState] {id}.
- Sign out.
- Sign up with the new email and password "testpassword123".
- Verify that we are logged in and on the team settings page.
- Verify that there are two team members in the team members list.
- Verify that the new team member's name/email is displayed in the team members list.
```

List of bugs we found and fixed in the course of generating these tests

Just by creating this section and generating more tests for this application, we discovered several bugs in the application itself that were preventing the tests from working. In real-world projects, this is exactly why writing tests is such a worthwhile exercise. We won’t force you to learn the intricacies of this application and Next.js just to write your tests. Instead, here’s a list of the bugs we fixed, to give you an idea of the kinds of problems you might run into when creating tests like these for your own website.

- User activities weren’t always created (a race condition)

- Users couldn’t be deleted when activities existed (a foreign key constraint)

- The user menu wouldn’t collapse on click

- Updating the user name wouldn’t update the name icon in the user menu

- Updating the user name wouldn’t update the name in the team members list

- Not possible to update the email without updating the name

- Hydration errors on the choose plan page

Other classic problems with the tests that the AI generated:

- Strict mode violations / more than one element on the page that matches a locator

- Adding manual timeouts to tests instead of crafting good assertions

- Hardcoding URLs instead of using relative paths

- Not using Playwright’s test.step to label steps in the test, and adding excessive comments instead
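On the manual-timeouts point: a fixed sleep such as `await page.waitForTimeout(3000)` either wastes time or still isn't long enough, while Playwright's web-first assertions (for example `await expect(locator).toBeVisible()`) keep re-checking until they pass or time out. The underlying idea can be sketched in plain TypeScript. `pollUntil` is a hypothetical helper for illustration only, not how Playwright implements its assertions; in a real test you would use the built-in assertions, or `expect.poll` for values that aren't locators.

```typescript
// Illustration only: retry a check until it passes or a deadline expires,
// instead of sleeping for a fixed amount of time and hoping.
export async function pollUntil(
  check: () => boolean | Promise<boolean>,
  { timeoutMs = 5000, intervalMs = 100 }: { timeoutMs?: number; intervalMs?: number } = {},
): Promise<void> {
  const deadline = Date.now() + timeoutMs;
  for (;;) {
    // Succeed as soon as the condition holds; no time is wasted waiting.
    if (await check()) return;
    // Fail with a clear message once the deadline has passed.
    if (Date.now() >= deadline) {
      throw new Error(`Condition not met within ${timeoutMs}ms`);
    }
    // Otherwise wait a short interval and try again.
    await new Promise((resolve) => setTimeout(resolve, intervalMs));
  }
}
```

The design choice is the same one behind retrying assertions: the wait is bounded by a timeout, but the test moves on the moment the condition is true.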

Areas for improvement for our test setup at the moment

It’s been fantastic to generate a few tests using this method. While creating this tutorial, I was able to go from one test to ten tests in just an hour or two, but that pace also gives us good reason to pause and reflect on what we’ve done.

At the moment:

- We have lots of duplicated selectors across our tests. Better practice would be to use page object models, so that there are fewer places to update when selectors need to change.
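Playwright's documentation describes page object models as classes that wrap a page and expose its locators, so each selector is defined once. A rough sketch using the locators from the activity test could look like this. To keep it self-contained, it types the page as a small structural interface (`RoleQueryable`, a hypothetical name) instead of importing Playwright's `Page`; `DashboardPage` is also hypothetical.

```typescript
// Only the roles this sketch needs.
type Role = "heading" | "link" | "listitem";

// Structural stand-in for a Playwright-style page: anything with getByRole.
// Assumption: a real Playwright `Page` satisfies this shape.
interface RoleQueryable<L> {
  getByRole(role: Role, options?: { name?: string }): L;
}

// Hypothetical page object: the dashboard selectors, defined in one place.
export class DashboardPage<L> {
  constructor(private readonly page: RoleQueryable<L>) {}

  get teamSettingsHeading(): L {
    return this.page.getByRole("heading", { name: "Team Settings" });
  }

  get activityLink(): L {
    return this.page.getByRole("link", { name: "Activity" });
  }

  get activityLogHeading(): L {
    return this.page.getByRole("heading", { name: "Activity Log" });
  }
}
```

In a test you would construct it with the real page, e.g. `const dashboard = new DashboardPage(page)`, then `await dashboard.activityLink.click()`. If the link's accessible name changes, only the class needs updating.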