Prepare yourselves, we will be here for a while

End-to-end testing your web application isn’t something you do for a day or a week. It’s a process you gradually adopt until it becomes a vital part of how you ship software. Playwright is the best tool out there for reliable and efficient end-to-end testing, but even the best tools can have disastrous outcomes when used the wrong way.

We believe that a great Playwright setup will pay for itself many times over in the long run. You don’t want to feel like a slow and flaky test suite is making you less productive as a software engineer. Quite the opposite: we want our test suite to speed us up!

What bad setups feel like

If you feel like you are slowly losing trust in your end-to-end testing suite, it could be because of your setup. Here are some classic signs:

- Flakes due to other tests interfering with the same test data / user state

- Tests that fail on retries because of dirty state from previous runs

- Tests that take an excessive amount of time to run because of many UI-based setup steps

- Failures you already fixed in one test popping up again in others

If engineers don’t believe that test suite failures mean the application is actually broken, they tend to keep retrying the suite for hours before debugging the issue. What a waste!

Here is our best advice for creating great Playwright setups, collected over years of running Playwright in the wild.

API-driven test data

The single most important thing you can do for your Playwright setup in the long term is to be able to create test data on demand through an API. Let’s talk about why this matters.

Here’s your typical first Playwright test:

```typescript
import { test } from '@playwright/test';

test('login', async ({ page }) => {
  await page.goto('https://example.com/login');
  await page.getByRole('textbox', { name: 'Username' }).fill('admin');
  await page.getByRole('textbox', { name: 'Password' }).fill('password');
  await page.getByRole('button', { name: 'Login' }).click();
  await page.waitForURL('https://example.com/dashboard');
});
```

Two weeks later we decide that we want to also test the checkout.

```typescript
import { test } from '@playwright/test';

test('checkout', async ({ page }) => {
  // The same login steps as in the login test, copied over
  await page.goto('https://example.com/login');
  await page.getByRole('textbox', { name: 'Username' }).fill('admin');
  await page.getByRole('textbox', { name: 'Password' }).fill('password');
  await page.getByRole('button', { name: 'Login' }).click();
  await page.waitForURL('https://example.com/dashboard');

  await page.goto('https://example.com/checkout');
  await page.getByRole('textbox', { name: 'Card number' }).fill('1234567890123456');
  await page.getByRole('textbox', { name: 'Expiration' }).fill('12/25');
  // other checkout steps and assertions
});
```

Three weeks later we add three more tests: the team invite flow, the logout flow and the delete user flow.

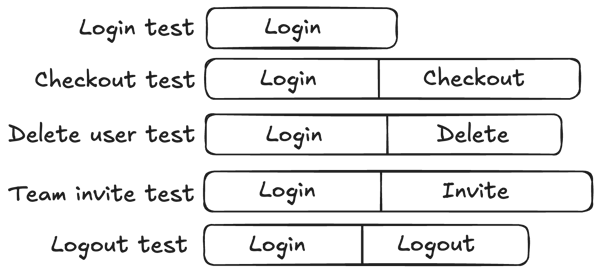

We’re now spending more than half of our time testing the login flow in our test suite. As we need more data associated with our users, or several users to test collaboration, setting things up takes over from meaningfully testing our application.

The cheapest way around this is to keep using the UI, but to do the setup only once and share the resulting state between tests.

This is normally done with a setup project in Playwright combined with the storageState option.
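The setup project’s job is to log in once through the UI. It then saves the resulting cookies and local storage to disk with the browser context’s `storageState` method.

Below is a minimal sketch of that step, reusing the example.com login form from above. The helper name `saveLoggedInState` and its default path are assumptions. The helper would be called from the single test in `setup.spec.ts`.

```typescript
import type { Page } from '@playwright/test';

// Hypothetical helper for setup.spec.ts: log in through the UI once,
// then write cookies and local storage to a file other projects can load.
export async function saveLoggedInState(page: Page, path = '.auth/user.json'): Promise<void> {
  await page.goto('https://example.com/login');
  await page.getByRole('textbox', { name: 'Username' }).fill('admin');
  await page.getByRole('textbox', { name: 'Password' }).fill('password');
  await page.getByRole('button', { name: 'Login' }).click();
  // Make sure the login has completed before capturing the state
  await page.waitForURL('https://example.com/dashboard');
  await page.context().storageState({ path });
}
```

`setup.spec.ts` could then contain just `test('authenticate', async ({ page }) => saveLoggedInState(page));`. Projects that load the saved file through the `storageState` option then start out logged in.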

```typescript
import { defineConfig, devices } from "@playwright/test";

export default defineConfig({
  projects: [
    {
      // Logs in once through the UI and saves the session to .auth/user.json
      name: "setup",
      testMatch: "setup.spec.ts",
    },
    {
      name: "main project",
      testIgnore: ["setup.spec.ts"],
      // Only starts once the setup project has finished
      dependencies: ["setup"],
      use: {
        ...devices["Desktop Chrome"],
        // Set per project rather than globally, so the setup project
        // doesn't try to load the file before it has been written
        storageState: ".auth/user.json",
      },
    },
  ],
});
```

In our experience this is a good quick fix, but it doesn’t hold up as the suite scales. Even now, with the delete user test, it’s likely that we will accidentally delete our shared user while another test is still using it. Instead, learning to create and use API endpoints for more useful kinds of test data will make your suite much easier to extend.

A common way of using API endpoints like this is through fixtures.

import { test } from '@playwright/test';

import { createE2EUser, createSubscription } from './utils';

export const testWithNewUser = test.extend<{

newUser: void;

}>({

newUser: [

async ({ baseURL, page }, use) => {

// Calls the API to create a new user

const { cookies, user } = await createE2EUser();

// Calls the API to create a pro subscription for the user

await createSubscription(user, { plan: 'pro' });

await page.context().addCookies(cookies);

await use();

},

{ auto: true },

],

});

testWithNewUser('check pro features', async ({ page, newUser }) => {

// test that pro features work as expected

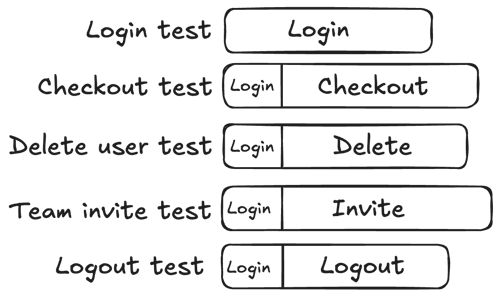

});When you start to use an API more extensively for creating test data, your suite will start to feel more like this:

The key here is having API endpoints that let engineers mix and match test data. That way they spend more time writing meaningful tests and less time debugging UI that has already been tested. This kind of setup won’t just make your test suite feel more reliable; it should also make it considerably faster.
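What sits behind helpers like `createE2EUser` depends entirely on your backend. Below is a minimal sketch of the `./utils` module imported above. It uses Playwright’s standalone `request.newContext` API client and assumes the backend exposes test-only endpoints.

All of the following are hypothetical:
- the base URL and the `E2E_API_URL` variable
- the `/api/e2e/...` routes
- the `E2EUser` shape

```typescript
import { randomUUID } from 'node:crypto';
import { request } from '@playwright/test';

// Assumption: test-only endpoints live under /api/e2e on this host
const API_URL = process.env.E2E_API_URL ?? 'https://example.com';

export interface E2EUser {
  id: string;
  email: string;
}

// Creates a brand-new user through a hypothetical endpoint and returns the
// session cookies it set, in a shape page.context().addCookies() accepts.
export async function createE2EUser() {
  const api = await request.newContext({ baseURL: API_URL });
  try {
    const response = await api.post('/api/e2e/users', {
      // A unique email per call, so parallel tests never collide
      data: { email: `e2e-${randomUUID()}@example.com` },
    });
    if (!response.ok()) {
      throw new Error(`createE2EUser failed with status ${response.status()}`);
    }
    const user = (await response.json()) as E2EUser;
    // The request context keeps any cookies set by the response
    const { cookies } = await api.storageState();
    return { cookies, user };
  } finally {
    await api.dispose();
  }
}

// Attaches a subscription to an existing user via another hypothetical endpoint
export async function createSubscription(user: E2EUser, options: { plan: 'free' | 'pro' }) {
  const api = await request.newContext({ baseURL: API_URL });
  try {
    const response = await api.post(`/api/e2e/users/${user.id}/subscriptions`, {
      data: options,
    });
    if (!response.ok()) {
      throw new Error(`createSubscription failed with status ${response.status()}`);
    }
  } finally {
    await api.dispose();
  }
}
```

Because every test gets a freshly created user, no other test can modify or delete its data. This addresses the shared-state flakes listed at the start of this post.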

Propagating shared learnings

Once your suite grows beyond four or five tests, you will find that even with decent API endpoints for creating test data on demand, you keep repeating the same steps and fixing the same problems in many places.

Let’s highlight some of the most common pitfalls in this space.

Stable environments with fixtures

One random Thursday, your test suite started failing because a third-party advertising provider’s servers were down. Very frustrating, since there was nothing wrong with the product and the problem was completely out of your hands.

But you’re good at reading Playwright’s documentation on best practices, so you added a few intercepts for the third-party provider.

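```typescript
import { test } from '@playwright/test';

// Placeholder: a canned response body for the third party endpoint
const testData = JSON.stringify({});

test('home page', async ({ page }) => {
  await page.route('**/api/fetch_data_third_party_dependency', route => route.fulfill({
    status: 200,
    body: testData,
  }));

  await page.goto('https://example.com');
});
```

Two weeks later, the same thing happens again and your suite is failing. Why the déjà vu?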

The intercepts only protect the tests you added them to, so every other test is still exposed. Problems like these, which affect your whole test suite, are best fixed at the fixture level instead of within individual tests. A common pattern we like to use is a “mother fixture”.

```typescript
import { test as base } from '@playwright/test';

// Placeholder: a canned response body for the third party endpoint
const testData = JSON.stringify({});

export const test = base.extend<{
  ignoreThirdPartyDependency: void;
}>({
  ignoreThirdPartyDependency: [async ({ page }, use) => {
    await page.route('**/api/fetch_data_third_party_dependency', route => route.fulfill({
      status: 200,
      body: testData,
    }));
    await use();
  }, {
    auto: true, // run for every test without the test having to ask for it
    box: true, // don't report this as a separate step in traces and reports
  }],
});
```

If all of our tests use or extend this base, or “mother”, fixture, we have a single place to add knowledge about how our environment works.

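```typescript
// Import test from the mother fixture file instead of '@playwright/test'
import { test } from './mother-test';

test('normal test', async ({ page }) => {
  // The third party endpoint is already stubbed by the auto fixture
  await page.goto('https://example.com');
});
```

Base fixtures like this are also a great place to add helper functions for the tests that need them.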
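Below is a minimal sketch of such a helper: a hypothetical `mockJson` fixture that hands tests a function for stubbing any endpoint with a JSON body. It is shown as its own `base.extend` call for brevity. In practice it would sit in the same mother fixture file, next to `ignoreThirdPartyDependency`.

```typescript
import { test as base } from '@playwright/test';

type MotherFixtures = {
  // Hypothetical helper: stub a URL pattern with a 200 JSON response
  mockJson: (urlGlob: string, body: unknown) => Promise<void>;
};

export const test = base.extend<MotherFixtures>({
  // Not `auto`, so only tests that destructure mockJson ever set it up
  mockJson: async ({ page }, use) => {
    await use(async (urlGlob, body) => {
      await page.route(urlGlob, route => route.fulfill({ status: 200, json: body }));
    });
  },
});
```

A test opts in by asking for the fixture. For example, `test('empty cart', async ({ page, mockJson }) => { await mockJson('**/api/cart', { items: [] }); await page.goto('https://example.com/cart'); })`, where the cart URLs are again only illustrative.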

Reproducible steps with page objects

One common cause of flaky tests that we see is dependencies between Playwright steps that aren’t explicitly declared.

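```typescript
await page.getByRole('button', { name: 'Buy product' }).click();
await page.waitForRequest('https://example.com/api/products/1');
```

The click can trigger the request before waitForRequest starts listening. waitForRequest only sees requests made after it is called, so in that case it waits until it times out. Whether this happens depends on timing, which is exactly what makes the test flaky.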

A great way of fixing this is using Promise.all:

```typescript
await Promise.all([
  page.getByRole('button', { name: 'Buy product' }).click(),
  page.waitForRequest('https://example.com/api/products/1'),
]);
```

Now the ordering doesn’t matter! We start waiting for the request at the same time as we click, and move on once both have happened.
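Playwright’s documentation often uses an equivalent idiom that gets the same guarantee without `Promise.all`: create the wait first, perform the action, then await the wait. Below is a sketch wrapped in a hypothetical `buyProduct` function for illustration, reusing the URL from above.

```typescript
import type { Page, Request } from '@playwright/test';

// Hypothetical wrapper showing the "start waiting first" idiom
export async function buyProduct(page: Page): Promise<Request> {
  // 1. Start listening for the request, but don't await it yet
  const requestPromise = page.waitForRequest('https://example.com/api/products/1');
  // 2. Perform the action that triggers the request
  await page.getByRole('button', { name: 'Buy product' }).click();
  // 3. Resolve with the request that was captured
  return await requestPromise;
}
```

Either form works. What matters is that the wait exists before the action can trigger the request.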

Now imagine that we’ve just figured that out, but buying the product is something we already do in 15 tests. We could copy and paste the fix into all of them, but that doesn’t mean the next person to write a test will remember to do the same thing.

Page object models are a popular way of creating an abstract representation of pages and the actions that can be taken on them.

```typescript
import type { Page } from '@playwright/test';

export class ProductPage {
  constructor(private page: Page) {}

  async buyProduct() {
    // Click and wait for the purchase request together, so the request can't be missed
    await Promise.all([
      this.page.getByRole('button', { name: 'Buy product' }).click(),
      this.page.waitForRequest('https://example.com/api/products/1'),
    ]);
  }
}
```

The most common reason for using them is to avoid duplicating locators across many tests. That’s great, but we think the most important reason to use page object models is to share knowledge about what we expect from a page across tests. Much more than a locator describes the state of the world.
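To make that concrete, here is a sketch of a page object that records more than locators:
- when the page counts as ready,
- that buying fires an API call that must succeed,
- what a successful purchase looks like.

The product URLs, the `productId` parameter and the confirmation text are hypothetical. The sketch also swaps `waitForRequest` for `waitForResponse`, so it can check the status.

```typescript
import { expect, type Page } from '@playwright/test';

// Hypothetical page object: URLs and confirmation text are illustrative only
export class ProductPage {
  private readonly page: Page;

  constructor(page: Page) {
    this.page = page;
  }

  // Knowledge: the page is only ready once the buy button can be used
  async goto(productId: number) {
    await this.page.goto(`https://example.com/products/${productId}`);
    await expect(this.buyButton()).toBeEnabled();
  }

  // Knowledge: buying fires an API call that must succeed,
  // and a successful purchase ends on a confirmation message
  async buyProduct(productId: number) {
    const [, response] = await Promise.all([
      this.buyButton().click(),
      this.page.waitForResponse(`https://example.com/api/products/${productId}`),
    ]);
    expect(response.ok()).toBe(true);
    await expect(this.page.getByText('Thank you for your purchase')).toBeVisible();
  }

  private buyButton() {
    return this.page.getByRole('button', { name: 'Buy product' });
  }
}
```

A test then reads as intent, for example `await productPage.goto(1); await productPage.buyProduct(1);`. Any change to what “a successful purchase” means lands in every test at once.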

Wrapping up

It’s easy to write simple tests that become hard to maintain in the long run. Early on, it feels like a burden to create a sophisticated setup for just a few tests. But what starts as a minor inconvenience becomes a productivity killer that erodes trust in your testing strategy over time.

So work on stability from day one. Invest in API-driven test data, and learn to use fixtures and page objects correctly early on. We have found that good setups using these methods haven’t just helped us ship faster; they have also helped us discover and fix many application bugs. End-to-end testing isn’t just a symbolic act. We are firm believers that developing your application and test suite in tandem meaningfully improves your product.

Start with the foundations. Your future self will thank you.

Endform is an end-to-end test runner for Playwright tests. If you’re interested in running your e2e tests faster than anywhere else, try running your suite with us!