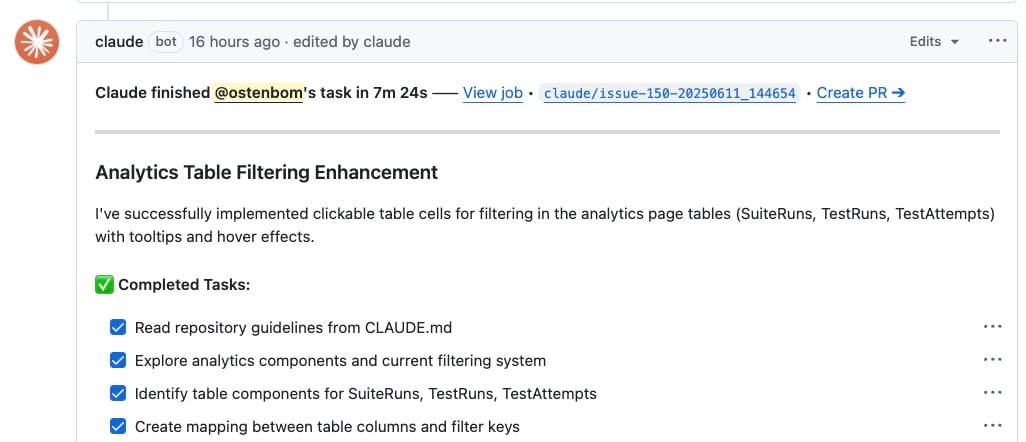

Do you remember your first “oh my god” moment using ChatGPT to generate code? You might be in for a little déjà vu. We completely crushed our high score for commits to main in a single day, and the key was Claude Code GitHub Actions: a new way to improve your velocity by kicking off coding tasks in the background. It’s incredibly powerful, with some pretty important caveats. Here’s our take.

I’m already heavily into Cursor. What do I need this for?

Then you’ll probably love this. People have been saying for a while that having an AI agent write code is a bit like having a junior software developer. This changes how we can work with that junior developer: instead of constantly holding its hand, we can tell it to do something, come back later, and give it feedback.

The power comes from two things:

- The Economics: You pay the direct API costs for the Claude model plus standard GitHub Actions runner time. For what you get back, that’s incredibly efficient.

- The Workflow Integration: Because it’s a GitHub Action, you can trigger it from almost any repository event: a new issue being created, a specific label being added, or a comment being posted on a PR.
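To make those triggers concrete, here is a minimal workflow sketch (not our exact file). The `issues` and `issue_comment` events are standard GitHub Actions triggers, and `issue_comment` also fires for comments on pull requests. The `claude` label name and the `@claude` mention checks are illustrative choices, and the permissions block is an assumption modeled on the action's example workflow. Whether the `@beta` action responds to a label without an `@claude` mention depends on its version, so check its README.

```yaml
# Hypothetical .github/workflows/claude.yml: a sketch, not our production file
name: Claude Code

on:
  issues:
    types: [opened, labeled]   # a new issue is created, or a label is added
  issue_comment:
    types: [created]           # a comment on an issue or a pull request

jobs:
  claude:
    # Assumption: only spend runner time when Claude is explicitly asked for
    if: |
      (github.event_name == 'issues' && github.event.action == 'opened' && contains(github.event.issue.body, '@claude')) ||
      (github.event_name == 'issues' && github.event.action == 'labeled' && github.event.label.name == 'claude') ||
      (github.event_name == 'issue_comment' && contains(github.event.comment.body, '@claude'))
    runs-on: ubuntu-latest
    # Assumption: mirrors the action's example workflow; verify against its README
    permissions:
      contents: read
      pull-requests: read
      issues: read
      id-token: write
    steps:
      - name: Checkout repository
        uses: actions/checkout@v4
        with:
          fetch-depth: 1
      - name: Run Claude Code
        uses: anthropics/claude-code-action@beta
        with:
          anthropic_api_key: ${{ secrets.ANTHROPIC_API_KEY }}
```

The `if:` guard keeps the job from starting on every issue and comment in the repository, which matters once you are paying for runner time on each event.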

This opens up entirely new ways of working. Imagine a sprint planning session where, instead of just creating tickets, your team collaborates on writing detailed prompts inside issues. By the time the meeting is over, Claude has already opened a pull request with a first-draft implementation for each ticket. We barely had to context switch to get started.

We followed the Anthropic documentation to get started. Running the `/install-github-app` command from inside Claude Code made it pretty straightforward.

Cursor also has “background agents” in beta; Anthropic aren’t the only ones who see the value in this.

First failures: flying too close to the sun

Our first major pitfall was not giving the AI guardrails. We just gave it vague problem descriptions and told it to have fun. No wonder the results weren’t great.

In many cases:

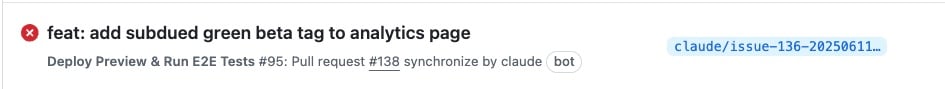

- The formatting didn’t match the rules that are applied automatically on save in our editors.

- The types failed to compile.

- The unit tests didn’t pass, or weren’t updated to match the change in functionality.

You wouldn’t ask a coworker for a review on a pull request with failing checks, so why should your AI junior engineer be any different?

Give Claude the same tools you have, and you can expect the results to be much better.

A few ways to do this:

- Make sure your dependencies are installed in the GitHub Actions job before Claude runs.

- Have a `CLAUDE.md` file in your repository that describes its conventions (the dashboard app uses Next.js 14 with the App Router, etc.).

- Allow Claude to run the tools it needs, so it gets fast feedback on its own changes.
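A minimal sketch of the dependency steps, assuming a pnpm project with a committed `pnpm-lock.yaml` and a `packageManager` field in `package.json`; Node.js 20 is also our assumption. These steps would sit before the “Run Claude Code” step in the same job, so the format, test, and type-check commands Claude is allowed to run actually have something to run against.

```yaml
steps:
  - uses: actions/checkout@v4

  # Installs pnpm; with no `version` input it reads the "packageManager"
  # field from package.json (assumed to be present)
  - uses: pnpm/action-setup@v4

  # Assumption: Node.js 20; caches the pnpm store between runs
  - uses: actions/setup-node@v4
    with:
      node-version: 20
      cache: pnpm

  # Install exactly what the lockfile pins, so Claude's checks match local ones
  - name: Install dependencies
    run: pnpm install --frozen-lockfile

  # ...followed by the "Run Claude Code" step
```

`pnpm/action-setup` has to come before `actions/setup-node` here, because the `cache: pnpm` option needs pnpm to already be on the path.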

Here’s a copy of the “Run Claude Code” step from our GitHub Actions workflow. We found that we needed to set the `allowed_tools` option here; using `.claude/settings.json` for this wasn’t as effective.

```yaml
- name: Run Claude Code
  id: claude
  uses: anthropics/claude-code-action@beta
  with:
    # Commands Claude may run itself: auto-format, unit tests, type check
    allowed_tools: "Bash(pnpm run format:fix),Bash(pnpm run test:unit run),Bash(pnpm run tsc)"
    # API key read from GitHub Actions secrets
    anthropic_api_key: ${{ secrets.ANTHROPIC_API_KEY }}
```

Last words of wisdom here: watch a full Claude Code GitHub Actions run live. The log is incredibly verbose and hard to read (thousands of lines), but doing so I caught two setup errors that Claude had pointed out and then ignored, and they were making the results worse. Set yourself up for success by taking the time to get the setup right.

The siren’s call: respect your code

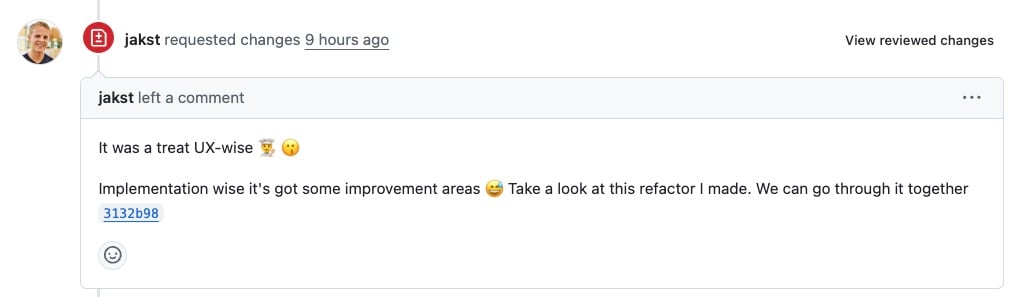

Even with perfect guardrails, you’re still flying with one leg, three fingers, and a left eye. Treat the AI’s output as a first draft. Your junior AI engineer needs feedback, and sometimes it’s better to sit down and pair with it than to keep handing it vague instructions.

The code review process is suddenly more important than ever! You’re not just checking for typos; you’re validating the entire approach.

The holy grail: end-to-end tested preview infrastructure in pull requests

With so many of these powerful new workflows, what we’re looking for is confidence. Confidence that the code looks good, is maintainable and well tested. Confidence that the product does what it’s meant to do, and works as well as it did last week.

We believe that preview infrastructure in pull requests is how you achieve this with the tightest, fastest iteration loop. Don’t second-guess whether it works the way you think it will: open a live, deployed copy of the code and validate your assumptions!

The best way to validate your assumptions over time is to codify them as end-to-end tests. Your tests can describe the behaviour your users expect to see: the kinds of behaviour you don’t want to change accidentally without your explicit consent.
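As a sketch of wiring this into pull requests, the workflow below runs a Playwright suite against a PR’s preview deployment. Several pieces are assumptions: the per-PR URL pattern on `preview.example.com` is hypothetical, `playwright.config.ts` is assumed to set `use.baseURL` from a `BASE_URL` environment variable, and the preview is assumed to be live when the job starts (in practice you would wait for your hosting provider’s deployment to finish first).

```yaml
# Hypothetical workflow: end-to-end tests against each PR's preview deployment
name: E2E on preview

on:
  pull_request:

jobs:
  e2e:
    runs-on: ubuntu-latest
    env:
      # Assumption: previews are served at a per-PR URL like this one
      BASE_URL: https://pr-${{ github.event.pull_request.number }}.preview.example.com
    steps:
      - uses: actions/checkout@v4
      - uses: pnpm/action-setup@v4
      - uses: actions/setup-node@v4
        with:
          node-version: 20
          cache: pnpm
      - run: pnpm install --frozen-lockfile
      # Download the browsers Playwright drives, plus their OS dependencies
      - run: pnpm exec playwright install --with-deps
      # Assumes playwright.config.ts reads process.env.BASE_URL into use.baseURL
      - run: pnpm exec playwright test
```

A failing test then shows up as a failing check on the pull request, right next to the preview link, which is exactly where you are reviewing Claude’s first draft.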

Here at endform, we can run your Playwright end-to-end tests faster than anywhere else.

A new way of working

The day we turned this on, half of our commits to main originated from a first draft written by our Claude agent. It’s a game-changer that allows you to spend less time on boilerplate and more time on architecture and review.

It’s a completely new way of working that you and your team can benefit from, but beware. Take your quality guardrails seriously, and treat code review with the respect it deserves.

Now, go have some fun.