In time, the effect of AI agents on the software engineering industry will reveal itself. We will land somewhere between a fully autonomous and a less autonomous world. One thing is clear: not adopting AI makes you slower relative to your competitors. Some companies are going as far as making hiring decisions based on engineers’ willingness to adopt AI.

Positioning yourself and your organisation for long-term success isn’t straightforward. Here, we will give you our best advice for choosing an AI strategy for web-based end-to-end testing. We will try to guide your decision through larger trends observed in the software engineering industry, rather than making it specific to quality assurance or end-to-end testing. You can even apply these ideas to other areas of your software development or testing strategy.

Modeling the AI tooling space

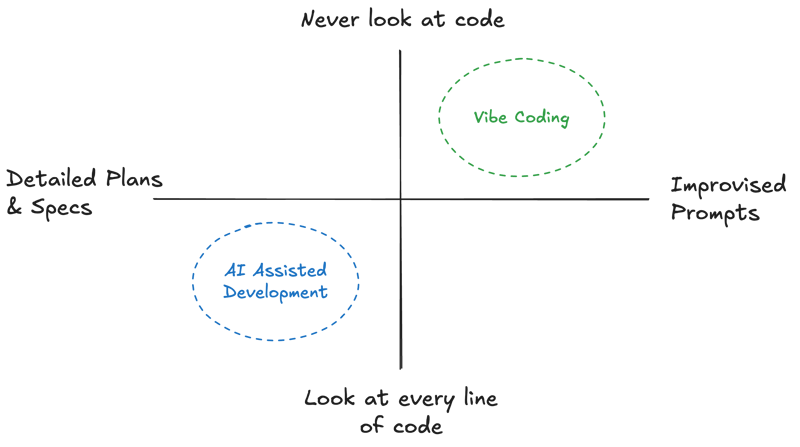

I like to use this model from Matt Pocock to understand the trade-offs between the different AI approaches on the market today.

Here, we distinguish between how much we interact with the inputs and the outputs of AI models. For example:

- Vibe coders: “I have no idea what it does, and I give it vague instructions”

- AI-assisted engineers: “I actively vet the output of the AI, and I give it very specific instructions”

Sceptics of vibe coding will happily point out that vibe-coded projects always plateau and are very difficult to scale over time. Garbage in, garbage out, so they say.

AI and end-to-end testing on the web

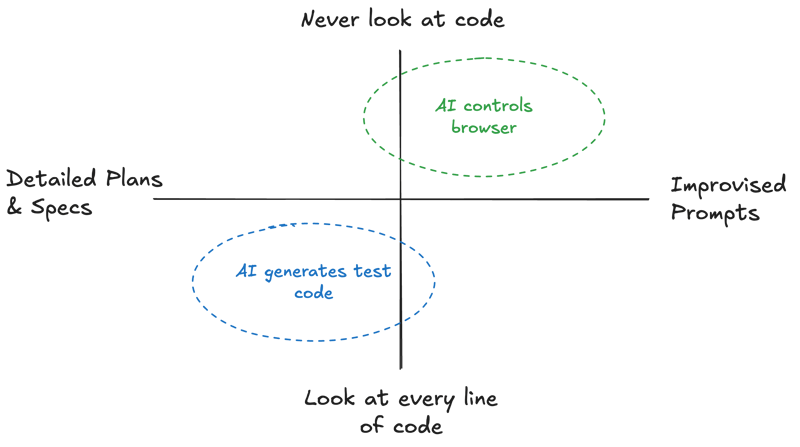

Let’s use the same model to describe the AI landscape for automated web application testing.

As with the vibe coding trap, we could craft vague prompts asking AI agents to test our application, with decent but varied results. Imagine asking a Playwright MCP server to “make sure that the checkout page works”: a happy path test might pass, but the agent doesn’t know that in your context it is worth testing several currencies or shipping methods. We can call this “AI-first” testing, where total control of the browser is given to the AI agent and natural language is the only input.

The safest and most correct variant of testing with AI is the “AI-assisted” approach. We can maintain documentation that describes the behaviors we want to test alongside the output, such as the generated Playwright test code. We can spend more time explaining that, in our context, the ability to choose different colours of shoe is critical.
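As a rough illustration of what owning that output can look like, here is a minimal sketch that spells out the checkout coverage from the earlier example as plain data. The currency and shipping values, the `CheckoutCase` type, and the `buildCheckoutCases` helper are all hypothetical names chosen for illustration, and the commented-out Playwright loop assumes a `/checkout` route that may not match your application.

```typescript
// Hypothetical domain values: replace with the currencies and shipping
// methods that actually matter for your checkout.
type Currency = "EUR" | "USD" | "SEK";
type ShippingMethod = "standard" | "express";

interface CheckoutCase {
  title: string;
  currency: Currency;
  shipping: ShippingMethod;
}

// Build one named case per currency/shipping combination, so the coverage
// we care about is explicit and reviewable in a pull request.
function buildCheckoutCases(
  currencies: readonly Currency[],
  shippingMethods: readonly ShippingMethod[]
): CheckoutCase[] {
  const cases: CheckoutCase[] = [];
  for (const currency of currencies) {
    for (const shipping of shippingMethods) {
      cases.push({
        title: "checkout works with " + currency + " and " + shipping + " shipping",
        currency: currency,
        shipping: shipping,
      });
    }
  }
  return cases;
}

const checkoutCases = buildCheckoutCases(
  ["EUR", "USD", "SEK"],
  ["standard", "express"]
);

// In a Playwright spec file, each case could become its own test, e.g.:
//
//   import { test } from "@playwright/test";
//   for (const c of checkoutCases) {
//     test(c.title, async ({ page }) => {
//       await page.goto("/checkout"); // hypothetical route
//       // ...select c.currency and c.shipping, then assert on the order summary
//     });
//   }
```

The point of the sketch is that the context an agent would otherwise have to guess (which currencies, which shipping methods) lives in code that a human can review and extend, whether a person or an AI wrote the first draft.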

While AI-assisted testing might be more correct, it comes at a cost. You need experienced technical staff for it to work. On the surface, browser agents that don’t require experience, salaries and benefits seem cheaper, but what is the difference in quality over time worth? Does “AI-first” testing plateau the same way vibe-coded apps do?

Cost of control

The key difference between these two approaches is the intermediate representation of the test. Do we have access to review and modify code that describes the intent of the test? Or does the AI agent drive the test by itself? We can describe the cost of reviewing and maintaining this intermediate representation as the “cost of control”.

Here are some important trade-offs to consider when thinking about your cost of control:

Transparency & Collaboration: How much do you value your existing code review processes and culture? Are your code reviews your primary mechanism for maintaining a quality bar? Is it important to have a clear sequence of events in git for scenarios like postmortems?

Reproducibility: How consistent should your results be across runs? AI-first tests may be less brittle to small UI changes, but you give up control over how those changes are interpreted.

Cost & Iteration Speed: How often do you want to run your suite (every commit vs. once a day)? AI-first suites that require running models alongside the browser are more expensive and slower to run. It may be too expensive to run the full suite on every change, and more customers are opting to run it daily instead.

Extensibility: Owning your own intermediate representation makes it easier to bring your existing tests elsewhere. You can build your context and expectations into code and documentation that you own, which also happens to be easier to extend with your tools and workflows.
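To make the cost and iteration speed trade-off concrete, here is a back-of-the-envelope sketch. Every number in it is a placeholder rather than a measurement, and the `SuiteCostInput` type and `monthlySuiteCostCents` function are hypothetical names; plug in your own run frequency and per-test cost (including any model usage) to compare running on every commit with running once a day.

```typescript
interface SuiteCostInput {
  runsPerDay: number; // e.g. commits per day, or 1 for a daily run
  testsPerRun: number;
  costPerTestCents: number; // hypothetical per-test cost, in whole cents
  workingDaysPerMonth: number;
}

// Monthly cost in cents: runs x tests x cost per test x days.
// Working in whole cents keeps the arithmetic exact.
function monthlySuiteCostCents(input: SuiteCostInput): number {
  return (
    input.runsPerDay *
    input.testsPerRun *
    input.costPerTestCents *
    input.workingDaysPerMonth
  );
}

// Placeholder scenario: the same 200-test suite at 5 cents per test.
const perCommitCents = monthlySuiteCostCents({
  runsPerDay: 40,
  testsPerRun: 200,
  costPerTestCents: 5,
  workingDaysPerMonth: 20,
});

const dailyCents = monthlySuiteCostCents({
  runsPerDay: 1,
  testsPerRun: 200,
  costPerTestCents: 5,
  workingDaysPerMonth: 20,
});

// How many times more expensive the per-commit schedule is.
const costRatio = perCommitCents / dailyCents;
```

With these placeholder inputs, the per-commit schedule costs 40 times as much as the daily one, simply because it runs 40 times as often. The per-test cost is the lever that decides whether that multiple is affordable.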

If you bank on a strategy that leans towards fully autonomous AI agents, you must believe that these agents will outperform you at the task at hand, completely independently, in the near future. When your agents are also locked into a vendor, you have to believe that your vendor will perform exactly according to your wishes, consistently, for a long time.

Flexible and future-proof strategies

The safest bets in software engineering are open source, extensible ecosystems rather than individual tools. Controlling your end-to-end tests by owning the intermediate representation as Playwright code makes it easier to choose and change the tools you use over time.

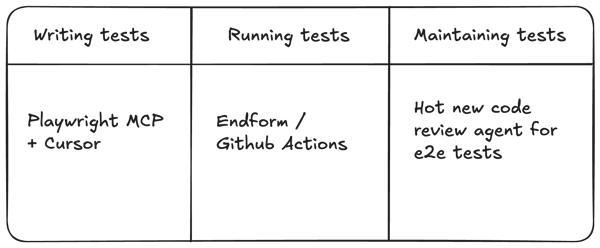

Here’s a sketch of a strategy for using AI with end-to-end tests:

Look for tools where you can leverage your existing suite rather than rewriting tests from scratch for each separate product as they come and go. This week you might be integrating the best new tool on the market for automatically improving your tests. The week after it might be included in the IDE you use every day. Choosing an ecosystem over a single product is the best way to find stability in an ocean of AI hype.

Learning from our surroundings

The widely predicted disappearance of software engineers when AI coding tools first appeared hasn’t happened. Instead, the role of the engineer has shifted: it’s less about typing and more about understanding, planning, and reviewing code. The teams that succeed are the ones combining human expertise with AI acceleration.

The same lesson applies to testing. What we are really chasing is clarity and a shared understanding of a product, so that engineers and testers can keep building without slowing down. We can certainly reach that value more productively with AI, but without ownership, we won’t reach the same level of confidence in our tests.

Rethink your AI strategy. Choose an ecosystem that gives you the flexibility to change and grow with the market. Our chosen intermediate representation for the end-to-end testing space is Playwright. What’s yours?

Endform is an end-to-end test runner for Playwright tests. If you’re interested in running your end-to-end tests faster than anywhere else, sign up for our waitlist!